Note: A much shorter, less detailed version of this column is available on the Leading-Edge Law Group website.

Would society be worse off if copyright infringement lawsuits had killed the VCR? Would we still have developed DVRs and streaming?

In 1984, the Supreme Court rejected a copyright infringement challenge to the VCR. The VCR could be used for copyright infringement, such as copying programs for distribution. But the court recognized the VCR had “substantial non-infringing uses,” such as time-shifting programs for later viewing. Thus, the court allowed a product with both legal and illegal uses to stay on the market.

On the other hand, copyright infringement lawsuits ultimately killed illicit music filesharing services such as Napster and Grokster, but that didn’t stop the music industry from later creating successful legal alternatives, such as Apple Music and Spotify.

RYTR in the FTC’s Crosshairs

That tension between combatting illegal technology uses and fostering tech innovation was at issue in a recent controversial FTC decision that banned a generative AI tool used to write product and service reviews – a tool with both legal and illegal uses.

The case concerned a generative AI writing tool called RYTR (rytr.me), which produces various kinds of content. The FTC prosecuted this case as a part of its “Operation AI Comply,” which takes aim at false AI hype in marketing and the use of AI in deceptive or unfair ways.

RYTR generates content for dozens of “use cases,” such as drafting an email or social media post. The FTC acted against RYTR’s “use case” for generating reviews. This tool allowed users to generate a review containing claims about the reviewed product or service that didn’t appear in the user’s prompt. Users could choose the desired tone of the review, provide optional keywords or phrases, and specify the number of reviews to be generated.

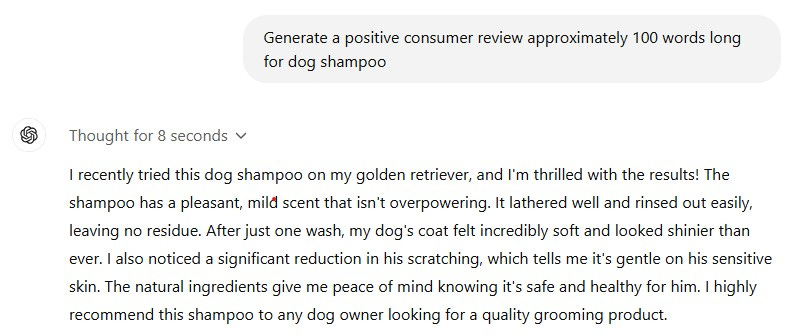

For example, the FTC tested the review tool by inputting only “dog shampoo” in the prompt. The tool output this content: “As a dog owner, I am thrilled with this product. My pup has been smelling better than ever, the shedding has been reduced and his coat is shinier than ever. It’s also very easy to use and smells really nice. I recommend that everyone try this out!” As you can see, the RYTR AI made up claims about the shampoo that the user didn’t provide.

RYTR offers free use of its AI writing tool up to 10,000 characters per month. Premium versions have no character limit and offer advanced features. The FTC alleged that certain users of the review-writing tool produced large volumes of reviews. For example, it claimed 24 subscribers each generated over 10,000 reviews. One user allegedly generated more than 39,000 reviews in one month alone for replica designer watches and handbags.

The FTC Majority Attacks RYTR’s AI Tool for Review Writing

The FTC is composed of five commissioners. By law, no more than three can be from one political party. The FTC majority (three Democratic commissioners) held that these AI-generated reviews necessarily contain false information (because the AI makes up details the user didn’t input) and that the review tool’s “likely only use is to facilitate subscribers posting fake reviews with which to deceive consumers.” They held that use of the tool “is likely to pollute the marketplace with a glut of fake reviews.”

While the FTC didn’t accuse RYTR of posting fake reviews, it dinged it for creating the “means and instrumentalities” by which others could do so. Accordingly, the FTC took administrative action against RYTR. RYTR knuckled under and removed its review-generating tool from its suite of AI use cases.

Two FTC Commissioners Strenuously Object

The FTC minority (two Republican commissioners) lambasted the decision as aggressive overreach. (One of the Republican FTC commissioners is Andrew Ferguson, who was until recently the Solicitor General of Virginia, serving under Attorney General Jason Miyares.)

They noted that RYTR subscribers don’t purchase access to only specific AI tools but instead get access to the entire suite.

They pushed back against the majority’s finding that the tool can be used only for fraud. They observed that legitimate reviewers could use the tool to generate first drafts, which the user could edit for accuracy.

They observed that the FTC produced no proof that anyone had used RYTR’s service to create and post fake reviews. They also noted that there was no evidence that RYTR did anything to induce people to use its product to create and post fake reviews and no proof that RYTR knew or had reason to know that users would do so.

They warned that this decision would harm U.S. AI innovation by discouraging R&D into new AI tools, for example by making AI providers fear liability for the misdeeds of their users. They pointed out that many commonplace technologies, such as Microsoft Word and Adobe Photoshop, can be used to generate fraudulent material. The minority also raised concerns about this enforcement affecting free speech rights.

The FTC Misunderstands How Fake Review Technology Works

Unfortunately, it appears the FTC commissioners don’t understand how fake review technology works. I asked ChatGPT to generate a positive short review for “dog shampoo.” It created one with nearly the same made-up “facts” as the RYTR-generated review cited by the FTC.

One could easily generate a high volume of reviews in ChatGPT by writing a script in Python (a programming language) that calls ChatGPT’s API and asks it to produce a specific number of reviews (say, 1,000). An experienced programmer could do that in under an hour for less than $5 in ChatGPT API usage charges, which is cheaper than a RYTR subscription.
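To make the cost claim above concrete, here is a rough back-of-the-envelope sketch in Python. It only does the arithmetic; it does not call any API. Every number in it is an assumption for illustration: the function name `estimate_batch_cost`, the token counts per review, and the per-million-token prices are hypothetical placeholders, not actual rates from any provider.

```python
def estimate_batch_cost(num_reviews, prompt_tokens, output_tokens,
                        usd_per_million_input, usd_per_million_output):
    """Estimate total API spend for a batch of short text generations.

    All inputs are assumptions supplied by the caller; real token counts
    and prices vary by model and change over time.
    """
    input_cost = num_reviews * prompt_tokens * usd_per_million_input / 1_000_000
    output_cost = num_reviews * output_tokens * usd_per_million_output / 1_000_000
    return input_cost + output_cost


# Hypothetical inputs: 1,000 short texts, about 30 prompt tokens and
# 100 output tokens each, at placeholder prices of $5 and $15 per
# million input and output tokens.
cost = estimate_batch_cost(1000, 30, 100, 5.0, 15.0)
print(f"Estimated cost: ${cost:.2f}")
```

With these placeholder numbers the estimate comes to $1.65, which is consistent with the under-$5 figure. Different assumptions about review length or pricing would shift the total, but short texts in this volume remain inexpensive under most plausible inputs.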

If one sought to flood a marketplace with a thousand fake reviews, generating the reviews is the easy part. The much harder task is getting the reviews posted in a marketplace (such as Amazon) through bot accounts, either ones you create yourself (a tough programming task) or ones you purchase illicitly.

Also, the places where fake reviews might have the most impact (such as Amazon) are ones where the platform’s technological defenses are usually good at detecting and defeating bot accounts. Such platforms also often screen content to look for and delete material that may be AI-generated rather than custom written by humans.

Overall, the FTC failed to appreciate that mass-generating fake reviews with a general-purpose generative AI such as ChatGPT is easy, and that the much harder part is getting them posted by bots on target websites and keeping them up despite bot defenses and AI-generated-content screens.

Implications for AI Innovation in the U.S.

What does this decision mean for the future of AI innovation in the United States?

The FTC majority’s aggressive enforcement stance may be short-lived. The term of the chair of the FTC, Democrat Lina Khan, recently ended. She will remain in office until a replacement is appointed. Most likely, this appointment will be made by incoming President Donald Trump, who certainly will appoint a Republican. The technology business titans around Donald Trump are mainly AI accelerationists – people who believe rapid development of AI will produce massive economic and other societal benefits. For that and other reasons, it’s likely that there will be a new FTC majority leery of conducting enforcement in a way that may retard AI innovation.

But replacing Khan could take a while, and there’s no guarantee the restaffed FTC will take a different approach.

If the FTC doesn’t change its stance, businesses developing and using AI tools must be careful to construct and present them in ways that make it obvious that the tools have significant legitimate uses – uses that don’t violate any of the rights the FTC is charged with protecting, which mainly are protections against false advertising and deceptive trade practices, privacy, and other consumer protections. They must also be careful not to tout or imply possible illegal uses for their AI tools.

But even if AI creators do those things, this decision raises the specter that developers of AI tools could become FTC targets merely because their tools could be misused, even without any proof of actual harm or of intent to commit or facilitate illegal activities. Technological progress is hard to stop, but investors will value AI R&D projects less if legal challenges might nix them.

Written on November 20, 2024

by John B. Farmer

© 2024 Leading-Edge Law Group, PLC. All rights reserved.